By Yi Chen, Altair

Whether a CFD solver uses a finite element, finite volume, or finite difference method, achieving optimal scalability on the latest HPC hardware is the ultimate quest. This blog post summarizes some of the results on GPU acceleration of Altair AcuSolve™ that were presented at the Supercomputing 2020 (SC20) conference.

AcuSolve is a powerful general-purpose computational fluid dynamics (CFD) solver that provides a full range of physical models for analyzing fluid and thermal systems. It allows engineers and designers to quickly analyze and understand fluid and thermal effects in real-world engineering applications using high-performance numerical simulations. AcuSolve is an implicit steady-state and transient solver based on the stabilized Galerkin/Least-Squares (GLS) finite element method. It has a proven track record in large-scale industrial applications for its accuracy, efficiency, and robustness. Focus areas for the product include thermal management, multiphase flows, and internal/external aerodynamics.

Sparse Linear Solver of AcuSolve

Implicit finite element algorithms usually need to repeatedly solve large-scale sparse linear systems with tens or hundreds of millions of degrees of freedom. The sparse linear solver is a critical part of AcuSolve and the most time-consuming part of steady-state simulations, accounting for 60 to 80 percent of the total runtime on CPUs. The linear solvers rest on rich mathematical foundations: they are based on general CG/GMRES/GCRO solvers but are highly customized for fluid dynamics problems. In terms of implementation, they can be broken down into basic linear algebra operations on sparse and dense matrices and vectors.
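The post does not show the solver itself. As a minimal sketch of how a Krylov solver decomposes into basic operations, the Python below implements plain, unpreconditioned conjugate gradient (valid only for symmetric positive definite matrices) on top of three building blocks: a CSR sparse matrix-vector product, a dot product, and an axpy update. The `CsrMatrix` type and all function names are hypothetical illustrations; AcuSolve's customized CG/GMRES/GCRO solvers are considerably more elaborate.

```python
from dataclasses import dataclass
from typing import List

Vector = List[float]


@dataclass
class CsrMatrix:
    """Compressed Sparse Row storage (hypothetical illustration type).

    Row i's nonzeros are values[row_ptr[i]:row_ptr[i + 1]], with matching
    column indices in col_idx[row_ptr[i]:row_ptr[i + 1]].
    """
    n: int
    row_ptr: List[int]
    col_idx: List[int]
    values: List[float]


def spmv(a: CsrMatrix, x: Vector) -> Vector:
    """Building block 1: y = A x (sparse matrix-vector product)."""
    y = [0.0] * a.n
    for i in range(a.n):
        acc = 0.0
        for k in range(a.row_ptr[i], a.row_ptr[i + 1]):
            acc += a.values[k] * x[a.col_idx[k]]
        y[i] = acc
    return y


def dot(x: Vector, y: Vector) -> float:
    """Building block 2: inner product x . y."""
    return sum(xi * yi for xi, yi in zip(x, y))


def axpy(alpha: float, x: Vector, y: Vector) -> Vector:
    """Building block 3: return alpha * x + y."""
    return [alpha * xi + yi for xi, yi in zip(x, y)]


def conjugate_gradient(a: CsrMatrix, b: Vector,
                       tol: float = 1e-10, max_iter: int = 1000) -> Vector:
    """Solve A x = b for symmetric positive definite A, starting from x = 0."""
    x = [0.0] * a.n
    r = list(b)  # residual b - A x, equal to b because x starts at zero
    p = list(r)  # initial search direction
    rr = dot(r, r)
    for _ in range(max_iter):
        if rr ** 0.5 < tol:
            break
        ap = spmv(a, p)
        alpha = rr / dot(p, ap)
        x = axpy(alpha, p, x)       # x += alpha p
        r = axpy(-alpha, ap, r)     # r -= alpha A p
        rr_new = dot(r, r)
        p = axpy(rr_new / rr, p, r)  # p = r + beta p
        rr = rr_new
    return x


# Example: 1D Laplacian [[2, -1, 0], [-1, 2, -1], [0, -1, 2]] in CSR form.
A = CsrMatrix(n=3,
              row_ptr=[0, 2, 5, 7],
              col_idx=[0, 1, 0, 1, 2, 1, 2],
              values=[2.0, -1.0, -1.0, 2.0, -1.0, -1.0, 2.0])
print(conjugate_gradient(A, [1.0, 0.0, 1.0]))
```

Only `spmv`, `dot`, and `axpy` touch the vector data; in a GPU port, each would typically be swapped for a library routine or custom kernel while the outer iteration stays unchanged.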

CPU Scalability

Large-scale sparse linear algebra operations are memory-bound rather than compute-bound: their speed is limited by how fast data can be moved from main memory, not by how fast the cores can process it. On a workstation with a limited number of memory channels, the benefit of adding CPU cores to speed up the sparse linear solver diminishes quickly. We used the widely adopted STREAM benchmark to measure the memory bandwidth of a 32-core workstation with spread-type core binding. As shown in the following chart, adding more cores does not increase the memory bandwidth.
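To see why bandwidth rather than core count limits the solver, a roofline-style estimate helps: a kernel cannot run faster than min(peak compute, memory bandwidth × arithmetic intensity), where arithmetic intensity is flops per byte moved. The sketch below applies this to a CSR sparse matrix-vector product in double precision with 32-bit indices. The matrix size, peak compute, and bandwidth figures are hypothetical, and the byte count assumes idealized caching; none of these numbers come from the Altair measurements.

```python
def csr_spmv_bytes(n_rows: int, nnz: int,
                   value_bytes: int = 8, index_bytes: int = 4) -> int:
    """Approximate minimum memory traffic (bytes) for one CSR SpMV y = A x.

    Idealized assumptions: every matrix value and column index is read once,
    the row-pointer array is read once, x is read once (perfect cache reuse),
    and y is written once.
    """
    matrix = nnz * (value_bytes + index_bytes) + (n_rows + 1) * index_bytes
    vectors = 2 * n_rows * value_bytes  # read x, write y
    return matrix + vectors


def attainable_gflops(peak_gflops: float, bandwidth_gbs: float,
                      flops: float, bytes_moved: float) -> float:
    """Roofline bound: min(compute peak, bandwidth * arithmetic intensity)."""
    intensity = flops / bytes_moved  # flop per byte
    return min(peak_gflops, bandwidth_gbs * intensity)  # GB/s * flop/B = GFLOP/s


# Hypothetical matrix: 1M rows, 27 nonzeros per row (e.g. a 3D 27-point stencil).
n_rows, nnz = 1_000_000, 27_000_000
bytes_moved = csr_spmv_bytes(n_rows, nnz)
flops = 2 * nnz  # one multiply and one add per nonzero
for bw in (100.0, 200.0):  # hypothetical sustained bandwidths in GB/s
    bound = attainable_gflops(1000.0, bw, flops, bytes_moved)
    print(f"{bw:.0f} GB/s -> at most {bound:.1f} GFLOP/s")
```

With roughly 0.16 flop per byte, the bound is about 15.7 GFLOP/s at 100 GB/s and 31.4 GFLOP/s at 200 GB/s, far below the assumed 1000 GFLOP/s peak. Doubling bandwidth doubles the bound, while adding cores does not move it once bandwidth is saturated.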

GPU Scalability

Compared to CPUs, GPUs are designed to offer much higher memory bandwidth, making them an ideal choice for memory-bound applications like the sparse linear solver. Porting AcuSolve's linear solver to GPUs was therefore an obvious way to reduce runtime significantly. For our benchmarks, we chose the AMD Radeon™ Pro VII graphics card, which offers 16 GB of high-speed HBM2 memory, up to 1024 GB/s of memory bandwidth, and support for up to six UHD display outputs, and which delivers exceptional performance in deep learning and HPC workflows.

We first benchmarked basic linear algebra operations, such as matrix-vector multiplication, for large square sparse matrices and for dense tall-and-skinny matrices. As the following chart shows, the GPU delivers impressive performance gains. We can therefore expect a considerable speedup of the sparse linear solvers, in which these basic operations serve as building blocks.
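Dense tall-and-skinny products arise naturally in Krylov methods such as GMRES: the basis V has n rows (one per unknown) but only k columns (one per iteration). The sketch below shows one classical Gram-Schmidt step, which orthogonalizes a new vector w against the orthonormal columns of V using exactly two tall-and-skinny operations, h = V^T w and w - V h. Each is a streaming pass over V, which is why such operations are memory-bound. This is an illustrative choice: the post does not say which orthogonalization scheme AcuSolve uses, and classical Gram-Schmidt is less numerically stable than the modified variant. All function names are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def tall_skinny_tmatvec(v_cols: List[Vector], w: Vector) -> Vector:
    """h = V^T w for an n x k matrix V stored as k columns of length n."""
    return [sum(vi * wi for vi, wi in zip(col, w)) for col in v_cols]


def tall_skinny_matvec_sub(v_cols: List[Vector], h: Vector, w: Vector) -> Vector:
    """Return w - V h, streaming over one column of V at a time."""
    out = list(w)
    for col, hj in zip(v_cols, h):
        for i, vi in enumerate(col):
            out[i] -= hj * vi
    return out


def cgs_step(v_cols: List[Vector], w: Vector) -> Tuple[Vector, Vector, float]:
    """One classical Gram-Schmidt step against orthonormal columns of V.

    Returns (q, h, norm): the new unit basis vector, the projection
    coefficients, and the norm of the orthogonalized vector.
    """
    h = tall_skinny_tmatvec(v_cols, w)
    u = tall_skinny_matvec_sub(v_cols, h, w)
    norm = math.sqrt(sum(ui * ui for ui in u))
    return [ui / norm for ui in u], h, norm


# Example: V holds e1 and e2 in R^3; w = (3, 4, 5) reduces to e3 with norm 5.
V = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
q, h, norm = cgs_step(V, [3.0, 4.0, 5.0])
print(q, h, norm)
```

Grouping the projections into one V^T w product, rather than k separate dot products, lets an implementation read each entry of V once per pass, which is the access pattern a tall-and-skinny BLAS-style kernel is built for.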

ROCm™/HIP

One challenge in porting AcuSolve's linear solver to GPUs is that the legacy CPU code uses a highly hierarchical, physically segmented data structure, which is unsuitable for data parallelism on GPUs. We therefore completely redesigned the data structure. As a result, the algorithms have been broken down into building blocks of basic linear algebra operations that directly use the APIs provided by the AMD ROCm™ open software platform.
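The post does not describe the redesigned data layout. As a hedged illustration of what flattening a segmented structure can look like, the sketch below merges a hypothetical legacy layout, a list of segments each holding (row, col, value) triplets, into a single Compressed Sparse Row (CSR) matrix, summing duplicate entries as finite element assembly does. CSR is one of the sparse formats rocSPARSE works with, but the function and the segment layout here are assumptions, not AcuSolve's code.

```python
from typing import Dict, List, Tuple

Triplet = Tuple[int, int, float]  # (row, col, value)


def segments_to_csr(n_rows: int, segments: List[List[Triplet]]
                    ) -> Tuple[List[int], List[int], List[float]]:
    """Merge segmented triplet storage into one flat CSR matrix.

    Duplicate (row, col) entries from different segments are summed, as in
    finite element assembly. Column indices within each row come out sorted.
    Returns (row_ptr, col_idx, values).
    """
    rows: List[Dict[int, float]] = [dict() for _ in range(n_rows)]
    for seg in segments:
        for r, c, v in seg:
            rows[r][c] = rows[r].get(c, 0.0) + v

    row_ptr = [0]
    col_idx: List[int] = []
    values: List[float] = []
    for entries in rows:
        for c in sorted(entries):
            col_idx.append(c)
            values.append(entries[c])
        row_ptr.append(len(col_idx))  # end of this row = start of the next
    return row_ptr, col_idx, values


# Example: two hypothetical segments, each a linear 1D element; they share node 1.
seg1 = [(0, 0, 1.0), (0, 1, -1.0), (1, 0, -1.0), (1, 1, 1.0)]
seg2 = [(1, 1, 1.0), (1, 2, -1.0), (2, 1, -1.0), (2, 2, 1.0)]
print(segments_to_csr(3, [seg1, seg2]))
```

The overlapping diagonal entries at (1, 1) from the two segments are summed to 2.0. The resulting three flat arrays are contiguous, so a GPU kernel can assign rows or nonzeros to threads without walking a hierarchy.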

Built for flexibility and performance, AMD ROCm gives machine learning (ML) and HPC application developers access to an array of open compute languages, compilers, libraries, and tools. These are designed from the ground up to meet the most demanding needs, helping to accelerate code development and solve the toughest challenges in today's complex world. ROCm libraries, including rocSPARSE, rocBLAS, and rocSOLVER, are used extensively in AcuSolve's linear solver.

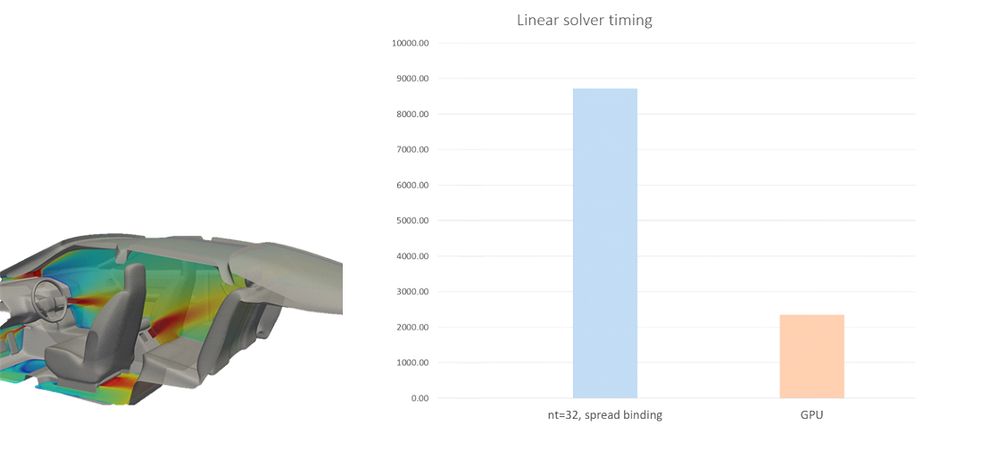

Speedup Result

After the complete linear solver was built for GPUs, we observed an impressive speedup. As the following charts show, the AMD Radeon™ Pro VII graphics card delivers a 3x to 5x speedup over 32 CPU cores.
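Because the linear solver accounts for 60 to 80 percent of steady-state runtime on CPUs, Amdahl's law gives a rough sense of what a 3x to 5x solver speedup could mean for the whole simulation if the remaining work were not accelerated. The sketch below is an illustrative estimate only, not a measured application-level result; the function name is hypothetical.

```python
def amdahl_speedup(fraction: float, local_speedup: float) -> float:
    """Overall speedup when `fraction` of the runtime is accelerated by
    `local_speedup` and the remainder is unchanged (Amdahl's law)."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be in [0, 1]")
    if local_speedup <= 0.0:
        raise ValueError("local_speedup must be positive")
    return 1.0 / ((1.0 - fraction) + fraction / local_speedup)


# Solver share of runtime (60-80%) and solver speedup (3x-5x) from this post.
for p in (0.6, 0.8):
    for s in (3.0, 5.0):
        print(f"solver share {p:.0%}, solver speedup {s:.0f}x -> "
              f"overall {amdahl_speedup(p, s):.2f}x")
```

Under these assumptions, the overall speedup ranges from about 1.67x (60 percent share, 3x solver speedup) to about 2.78x (80 percent share, 5x solver speedup). The unaccelerated share increasingly dominates as the solver gets faster.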

Summary

Altair AcuSolve currently supports ROCm 3.8 and targets three generations of AMD Radeon Instinct™ and AMD Instinct™ accelerators. With the help of HIP utilities, the source code was ported to the AMD ROCm platform in less than a month. We encourage more developers to start exploring HIP/ROCm and use it to leverage the computational power of AMD Radeon Pro VII graphics cards and AMD Instinct™ GPUs in their HPC applications.

For more information about the Altair AcuSolve presentation at SC20, please visit:

https://www.altair.com/resource/gpu-acceleration-of-altair-acusolve

Altair AcuSolve has a proven track record in large-scale fluid and thermal applications. For more information about AcuSolve, please visit https://www.altair.com/acusolve/.

Yi Chen is Senior CFD Development Manager at Altair. His postings are his own opinions and may not represent AMD’s positions, strategies or opinions. Links to third party sites are provided for convenience and unless explicitly stated, AMD is not responsible for the contents of such linked sites and no endorsement is implied.

DISCLAIMER

The information contained herein is for informational purposes only, and is subject to change without notice. While every precaution has been taken in the preparation of this document, it may contain technical inaccuracies, omissions and typographical errors, and AMD is under no obligation to update or otherwise correct this information. Advanced Micro Devices, Inc. makes no representations or warranties with respect to the accuracy or completeness of the contents of this document, and assumes no liability of any kind, including the implied warranties of noninfringement, merchantability or fitness for particular purposes, with respect to the operation or use of AMD hardware, software or other products described herein. No license, including implied or arising by estoppel, to any intellectual property rights is granted by this document. Terms and limitations applicable to the purchase or use of AMD’s products are as set forth in a signed agreement between the parties or in AMD’s Standard Terms and Conditions of Sale.

AMD, the AMD Arrow logo, Instinct, Radeon Instinct, Radeon, ROCm and combinations thereof are trademarks of Advanced Micro Devices, Inc. Other product names used in this publication are for identification purposes only and may be trademarks of their respective companies.

*Testing as of Nov 2020 by Altair labs on a production test system comprising CentOS 8.2 for Workstations, 64-bit, 64-core CPU, 256GB HBM2 RAM, AMD Radeon™ Pro VII, AMD Radeon™ Software for Enterprise 20.Q4 Pre-release version.