A new front has opened in China’s high-stakes AI race. Shanghai-based startup MiniMax has released MiniMax-M1, a powerful open-weight reasoning model that mounts a direct and multi-pronged challenge to the presumed dominance of its domestic rival, DeepSeek. The move escalates the regional competition from a battle of benchmarks to a more complex war fought over performance, cost-efficiency, and the very definition of “open source.”

In a strategic launch, MiniMax is positioning its M1 model as a superior alternative for developers. According to a report from The Register, the company is explicitly aiming to supplant DeepSeek as the industry’s key disruptor. Until this week, DeepSeek’s upgraded R1-0528 model was widely seen as China’s leading open-source contender.

MiniMax, however, claims in a blog post that M1 not only approaches the capabilities of top-tier Western systems but does so with greater efficiency and under a more permissive license.

This development signals a maturation of the AI ecosystem outside Silicon Valley, where the terms of engagement now include legal assurances and ethical positioning alongside raw technical power. For global developers and enterprises, the rivalry promises more powerful and accessible tools, but it also highlights the growing complexity of navigating a landscape shaped by intense competition and geopolitical pressures.

A Battle of Benchmarks and Architecture

On paper, MiniMax-M1 presents a formidable challenge through clever engineering. The model’s official GitHub repository details a hybrid Mixture-of-Experts (MoE) architecture that supports a one-million-token context window—eight times the capacity of DeepSeek’s R1.

This allows it to process vastly more information at once. While both models use the efficiency-boosting MoE technique, MiniMax claims its proprietary “Lightning Attention” mechanism and a novel reinforcement learning algorithm called CISPO are key differentiators.
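To illustrate why the MoE technique saves compute, here is a minimal sketch of generic top-k expert routing. It is not MiniMax's or DeepSeek's implementation: the toy experts, the router scores, and the function names `route_top_k` and `moe_forward` are all hypothetical. The point it shows is that only a few experts run for each token, so the compute per token is a fraction of what the model's total parameter count would suggest.

```python
import math

def softmax(scores):
    """Convert raw router scores into probabilities."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalize over the selected experts
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, gate_scores, k=2):
    """Weighted sum of the outputs of the selected experts only."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))

# Hypothetical toy experts: each one simply scales its input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
gate_scores = [0.1, 2.0, 0.3, 1.5]  # made-up router scores for one token

selected = route_top_k(gate_scores, k=2)
output = moe_forward(1.0, experts, gate_scores, k=2)
print(selected, output)
```

With `k=2` and four experts, half of the expert functions are never called for this token. Production MoE models apply the same idea with learned routers and far more experts.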

This architecture translates into significant cost savings. According to the official technical paper, this design is the foundation of the model’s efficiency: “Compared with DeepSeek … this substantial reduction in computational cost makes M1 significantly more efficient during both inference and large-scale [model] training.”

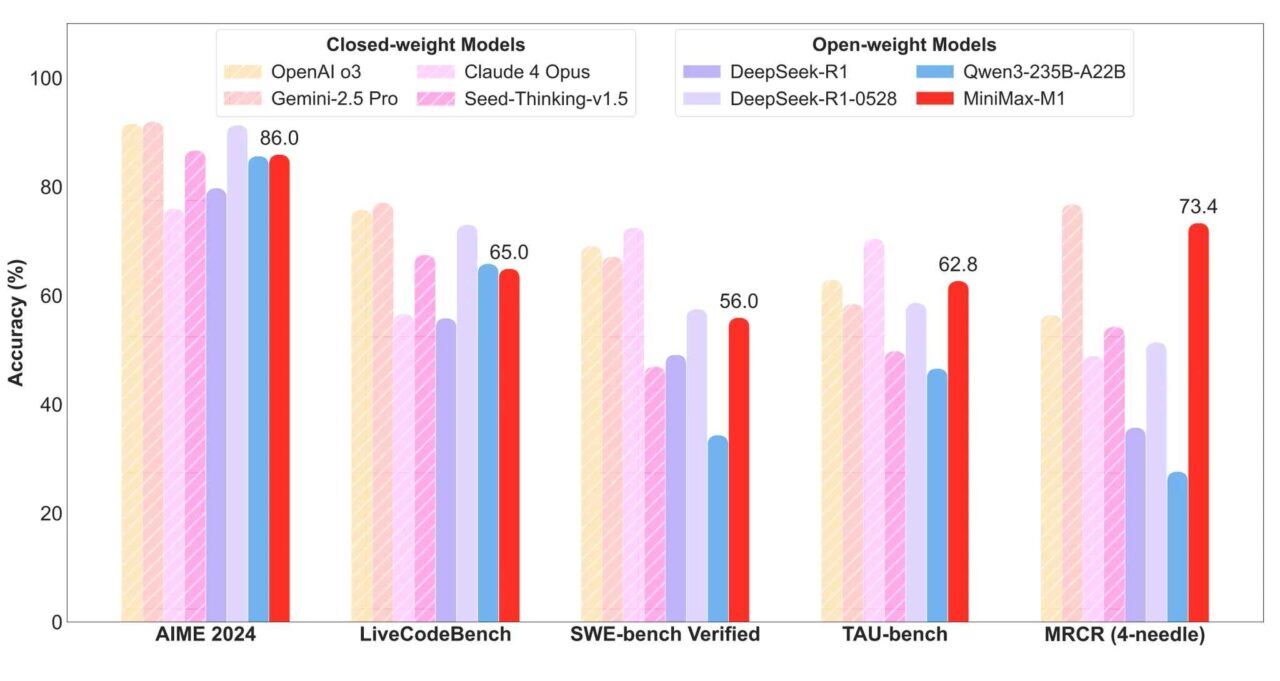

MiniMax asserts that for complex reasoning tasks, M1 requires only about 30 percent of the computing power of DeepSeek R1. While self-reported benchmarks show a nuanced picture—with DeepSeek maintaining a slight edge in some coding tests—M1 appears to pull ahead in long-context reasoning tasks, a critical capability for sophisticated applications.

More Than a License: The Open-Source Gambit

Perhaps MiniMax’s most strategic move is its choice of license. The company released M1 under an Apache 2.0 license, which it pointedly frames as “actually open source.” This is a deliberate jab at competitors like Meta, whose Llama models use a restrictive community license that the Open Source Initiative argues is not truly open source, and even at DeepSeek, whose releases are only partially covered by an open-source license.

The distinction is more than philosophical; it has significant legal and commercial implications. The Apache 2.0 license includes an explicit patent grant, which gives users stronger protection against potential infringement lawsuits. That is a critical consideration for enterprises looking to build commercial products on top of an open-weight model. By offering this legal clarity, MiniMax is making a calculated play to be seen as the safer, more business-friendly choice.

A Crown Under Siege: Distillation and Distrust

MiniMax’s challenge could not have come at a more opportune moment, as DeepSeek’s leadership has been clouded by controversy. The company has been grappling with a series of damaging allegations regarding its training data.

Speculation first arose around the company’s use of OpenAI’s models and has since extended to claims that DeepSeek’s latest model may have been trained on data from Google’s Gemini. This practice, known as distillation, violates the terms of service of most major AI labs. The allegations are compounded by intense geopolitical pressure.

In April, the US House Select Committee on the CCP labeled DeepSeek a national security risk, with Chairman John Moolenaar issuing a stark warning: “DeepSeek isn’t just another AI app — it’s a weapon in the Chinese Communist Party’s arsenal, designed to spy on Americans, steal our technology, and subvert U.S. law.”

Some experts, like AI researcher Nathan Lambert, have suggested that for a company facing GPU shortages due to US sanctions, distillation is a logical, if risky, shortcut to stay competitive.

“If I was DeepSeek I would definitely create a ton of synthetic data from the best API model out there. Theyre short on GPUs and flush with cash. It’s literally effectively more compute for them. yes on the Gemini distill question.”

— Nathan Lambert (@natolambert) June 3, 2025

A Global Race Fraught With Hurdles

While the drama unfolds in China, the global AI race is proving arduous for everyone. The challenges faced by DeepSeek and the competitive pressure from MiniMax are mirrored in the West, where even the most well-funded tech giants are hitting development roadblocks.

In a significant setback, Meta was forced to postpone its flagship Llama 4 Behemoth model in May due to performance issues. This industry-wide struggle suggests that the era of easy, rapid advancements may be ending. As NYU assistant professor Ravid Shwartz-Ziv observed, “the progress is quite small across all the labs, all the models.”

Looking ahead, the competitive differentiator may shift entirely. A recent PwC report on AI trends suggests that as foundational models become commoditized, the key to success will lie not in having the best off-the-shelf model, but in how effectively companies combine these powerful tools with their own proprietary data and institutional knowledge.

MiniMax’s emergence underscores a shift in the AI industry. The challenge to DeepSeek is not merely about performance metrics but is a broader contest of efficiency, legal strategy, and perceived trustworthiness. As the global race continues to accelerate, this multi-front competition in China demonstrates that building a dominant AI model now requires more than just code—it demands a mastery of the complex interplay between technology, ethics, and commerce.