The combination of Artificial Intelligence (AI) and Neuroscience is still an exciting domain for scientific research. The study of human cognition intersects with intelligent machine development, catalyzing advances for both fields. This symbiotic relationship has the potential to revolutionize our understanding of cognition and develop more accurate diagnostics/ treatments for neurological diseases.

Artificial Intelligence is a discipline in computer science that pertains to the development of machines that can emulate human intelligence. AI has successfully been deployed across domains such as medical diagnostics or natural language processing.

Advancements in hardware have driven technological shifts toward machine learning development to deep learning methods. Sustainable neuromorphic architecture use of organic neural structures draws attention to the development of efficient computing which leads to another technical breakthrough.

Neuroscience is the umbrella term under which all aspects of studying the brain and nervous system fall. These aspects include physiology, anatomy, psychology and even computer science. Neuroscience provides us with the means to understand brain function, and thereby insights into their implementation using AI algorithms. In contrast, AI is used in neuroscience research to analyze vast amounts of data related to brain functionality and pathology.

This article endeavors to delve into the interdependent companionship between Artificial Intelligence (AI) and neuroscience.

- Basic Understanding of AI Concepts: Familiarity with machine learning, neural networks, and computational modeling.

- Interdisciplinary Approach: Interest in connecting biological principles with computational techniques.

- Analytical Skills: Ability to critically analyze scientific literature and emerging technologies.

Artificial Neural Networks (ANNs) have changed AI forever, providing machines the ability to perform tasks that would normally require human intelligence. These mimic the architecture and actions of neurobiological networks, roughly replicating how neurons interact to process information in a brain.

Although ANNs have been successful in a plethora of tasks, the relationship between ANN and neuroscience could give us deeper insights about artificial and biological intelligence.

The Basics of Artificial Neural Networks

An artificial neural network is a collection of interconnected artificial nodes, often referred to as neurons or units. Given a set of neurons, these will process the incoming data by stepping through different layers that perform mathematical operations to derive useful insight and make predictions.

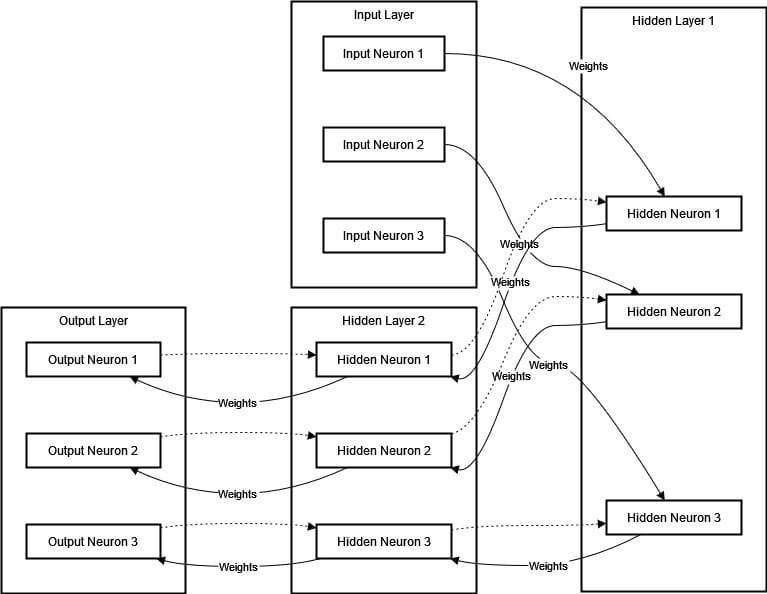

An ANN has multiple layers including an input layer, one or several hidden layers, and an output layer. The links that connect the neurons are called weights. These weights get adjusted during training to minimize the difference between our predicted result and actual output. The network learns from the data through a called backpropagation, where it repeatedly adjusts itself by moving back and forth through the layers. We can represent an Artificial Neural Network (ANN) in the following diagram:

Structure and learning of an artificial neural network

The diagram above represents an Artificial Neural Network (ANN) with one input layer, two hidden layers, and one output layer. Input data flows through the hidden layers to the output layer. Each connection has a weight that is adjusted in the training phase. Backpropagation is represented as dashed lines, indicating the of weight adjustment to minimize errors.

The Brain’s Neural Networks

The human brain is a cluster of billions of neurons, responsible for creating the nervous system. Neurons communicate through electrical and chemical signals, many of which arise from complex networks. These networks allow the brain to encode information, make decisions, and direct behavior. Neurons receive input from other neurons through their dendrites, that information in the cell body (also known as soma), and then send signals to downstream neurons via axons.

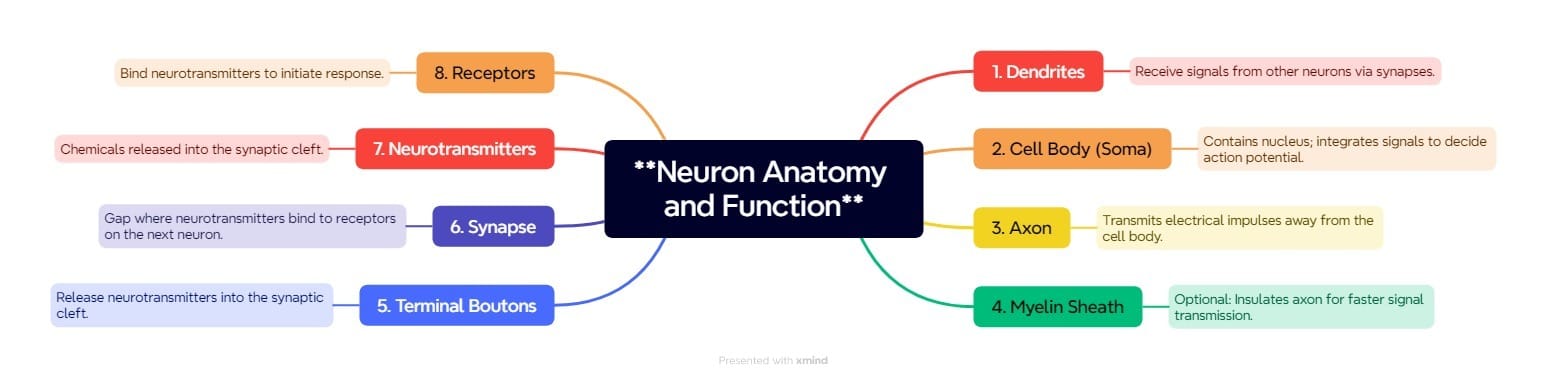

Neural networks in the brain are highly dynamic and can learn from experience, store information relevant to new situations, or recover function if damaged. Neuroplasticity is the capacity of the brain to reorganize itself in response to changes in the environment. The diagram below illustrates a neuron signal transmission pathway.

Neuron signal transmission pathway

The functional anatomy of a neuron is shown in the diagram above to illustrate its components and their functions during signal transmission. Dendrites function like antennas for neurons, receiving signals from other neurons via synaptic connection. The Cell Body (Soma) consolidates these signals and, with the help of its nucleus decides if it should create an action potential.

The Axon sends electrical signals away from the cell body to communicate with other neurons, muscles, or glands.

Myelin Sheath (optional) insulates axon for faster signal transmission. At the terminus of axon, terminal boutons release neurotransmitters in a Synaptic Cleft that bind to receptors on the dendrites of the receiving neuron. Neurotransmitters are chemical messengers released into the synaptic cleft. Receptors on receiving neuron’s dendrites bind neurotransmitters to trigger and move signals further.

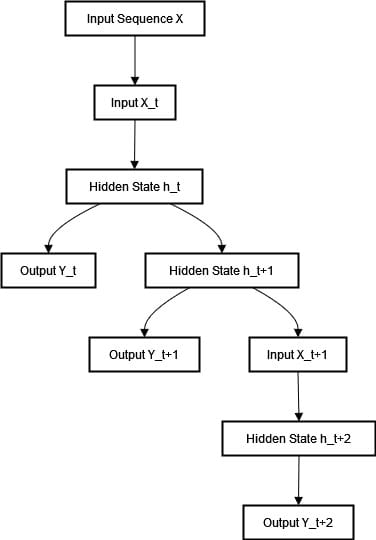

Recurrent Neural Networks (RNNs) store information in their hidden states to pull out the patterns in data sequences. Unlike feedforward neural networks, RNNs an input sequence one step at a time. Using earlier inputs to affect current outputs is ideal for tasks such as language modeling and time series forecasting. The flow in a Recurrent Neural Network is described in the flowchart below:

Flow within a Recurrent Neural Network

In the diagram abobe, the input sequence X is introduced to the network as Input X_t, which the RNN uses to update its hidden state to Hidden State h_t. Based on this hidden state, the RNN generates an output, Output Y_t. As the sequence progresses, the hidden state is updated to Hidden State h_t+1 with the next input Input X_t+1. This updated hidden state then evolves to Hidden State h_t+2 as the RNN es each subsequent input, continuously producing outputs for each time step in the sequence.

Memory in neuroscience consists of acquiring, storing, retaining and recalling information. It can be divided into short-term (working memory) and long-term memory, with the hippocampus playing an important role in declarative memories. The strength of synapses changing through synaptic plasticity is essential for the brain’s learning and memory.

RNNs borrow heavily from the brain’s memory functions and employ hidden states to carry information across time, thus approximating neural feedback loops. Both systems adjust their behavior based on past information, with RNNs using learning algorithms to modify their weights and enhance performance on sequential tasks.

Convolutional Neural Networks, which have transformed artificial intelligence, draw inspiration from nature’s paradigm. Through their multi-layered analysis of visual inputs, CNNs detect significant patterns in ways analogous to the human brain’s hierarchical approach.

Their distinctive architecture emulates how our brains extract abstract representations of the world through filtering and pooling operations across the visual cortex. Just as CNN’s success reshaped machine perception, comparing them to the brain enhances our understanding of artificial and biological vision.

Brain Visual ing System

The human brain is a powerful or of visual information. Located in front of the brain, the visual cortex is particularly responsible for ing visual information, utilizing a hierarchical structure of neurons to achieve this. These neurons are set up in layers, each layer dealing with different aspects of the visual scene, from simple edges and textures to complete shapes and objects.

In the preliminary stages, neurons in the visual cortex respond to simple stimuli like lines and edges. As visual information moves through successive layers, neurons integrate these basic features into complex representations and ultimately object recognition.

The Architecture of Convolutional Neural Networks

CNNs aim to replicate this approach, and the resulting network is an architecture with multiple layers. Convolutional layers, pooling layers, and fully connected layers are different types of layers in a CNN. They are responsible for detecting the specific features in the input image, where each layer passes its outputs on to the following layers. Let’s consider each one of them:

- Convolutional Layers: These layers use filters to “look” at the input image and detect local features like edges or textures. Every filter behaves like a receptive field and captures the local region in input data, much like the receptive fields of neurons in the visual cortex.

- Pooling Layers: These layers reduce the spatial dimensions of the data while still preserving critical characteristics. It is a nice approximation to the fact that human brains can condense and prioritize visual information.

- Fully Connected Layers: In the final stages, the last layers integrate all detected features. This approach is similar to how our brains integrate complex features to recognize an object, leading to a final classification or decision.

Similarities and Differences

The similarity between artificial neural networks crafted for computer vision tasks and the complex visual system in biological organisms lies in their hierarchical architectures. In CNNs, this progression emerges through simulated neurons performing mathematical operations. In living entities, it arises through actual neurons exchanging electrochemical signals.

However, striking differences do exist. The brain’s visualization handling far exceeds current algorithms in complexity and dynamism. Organic neurons hold the capability for interactive, experience-driven plasticity impossible to fully emulate. Furthermore, the brain es information, other senses and contextual details. This allows it to form a richer, more holistic understanding of the environment rather than analyzing images independently.

One parallel that might exist between RL and the human brain is how both systems learn by interacting with their environment. The artificial intelligence field is progressively being shaped by RL algorithms. This allows us to better understand how its learning strategies relate to those of our brains.

Reinforcement Learning

Reinforcement Learning (RL) is a type of machine learning where the agent learns to make decisions by interacting with its environment. It gets a reward or penalty according to its actions. After enough trials, the learning algorithm figures out which actions lead to maximize cumulative reward and learns an optimized policy to make decisions.

RL involves key components:

- Agent: It makes choices to reach a goal.

- Environment: This is everything outside the agent that it can interact with and get feedback from.

- Actions: These are the different things the agent can choose to do.

- Rewards: The environment gives the agent good or bad points after each choice to let it know how it’s doing.

- Policy: The strategy that the agent follows to determine its actions.

Brain Learning Mechanism

In a similar way to RL algorithms, the human brain operates in an environment that provides reinforcement signals during learning. When it comes to brain learning, especially reinforcement-related ing, some mechanisms are part of this :

- Neural Circuits: Networks of neurons that take in information and inform decision-making.

- Dopamine System: A neurotransmitter system involved in reward ing and reinforcement learning. The dopamine signals are reward feedback that adjusts future behavior.

- Prefrontal Cortex: A brain region involved in planning, decision-making, and evaluating possible rewards/penalties.

Similarities Between Reinforcement Learning and Brain Learning

Let’s consider some similarities between Reinforcement learning and brain learning:

- Trial and Error: RL algorithms and the brain learn through trial and error. RL agents differ in the actions they take to see what gives them the optimal rewards. In the same way, our brain tries out various behaviors to find which actions are most effective.

- Reward-based Learning: In RL, the agents learn from their rewards. Dopamine signals motivate the brain to reinforce behaviors that lead to positive outcomes while reducing those leading to negative results.

- Value Function: Using value functions, an RL algorithm can estimate expected rewards for all actions. Similar mechanisms are used by the brain for potential rewards evaluation and decision-making.

- Exploration vs. Exploitation: RL agents balance exploration (trying new actions) and exploitation (using known actions that yield high rewards). The brain also performs a balance between exploring new behaviors and relying on learned strategies.

Differences and Advancements

Although RL algorithms are inspired by brain functions there is a big difference. Let’s consider some of them:

Complexity

The brain learning mechanism is more complex and dynamic than the most sophisticated current RL algorithms. This is exemplified by the ability of our brain to sensory information from disparate sources, adapt in real time, and operate with considerable flexibility.

Transfer Learning

Humans can learn and transfer acquired knowledge to new situations, a property that remains difficult for most RL systems. In novel environments or tasks, most RL algorithms require significant retraining.

Multi-modal Learning

The brain is a multi-modal learner, leveraging different sensory inputs and experiences to learn more effectively. However, even the most advanced RL systems focus on a single type of interaction or environment.

The Symbiosis Between RL and Neuroscience

The connection between RL and the brain is bidirectional. Insights from neuroscience are integrated into the design of RL algorithms. This results in more sophisticated models which replicate brain-inspired learning es. On the other hand, improvements in RL can facilitate an explanatory framework to model and simulate brain function (thus aiding computational neuroscience).

Use Case: Enhancing Autonomous Driving Systems with Reinforcement Learning

Advanced algorithms enable autonomous vehicles to navigate complex environments, make immediate decisions, and keep passengers safe. Nevertheless, many of the current algorithms perform poorly in unpredictable scenarios.

This includes unexpected traffic flows, weather conditions, and erratic driving behavior from drivers. Increasingly, these demands require the ability to respond somewhat like a human brain would—requiring an adaptive and dynamic decision-making .

Solution

We can add Reinforcement Learning (RL) algorithms to mimic human learning to help improve the decision-making power of our autonomous driving systems. RL imitates our brain by learning from rewards and adapting to new scenarios. This enables it to enhance the performance of these systems under real-world driving conditions.

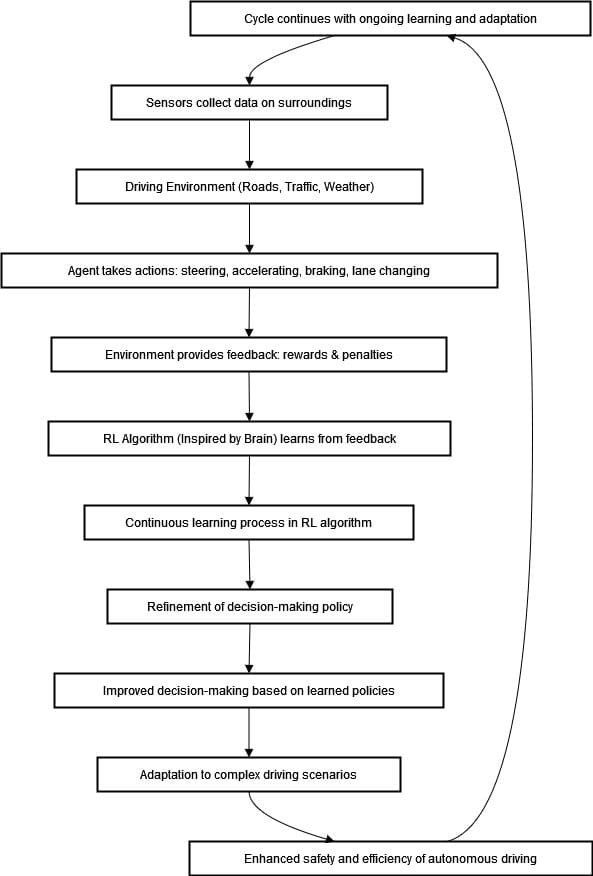

The diagram below illustrates the iterative of our autonomous driving system using Reinforcement Learning (RL) of an autonomous driving system using Reinforcement Learning (RL).

The agent (autonomous vehicle) interacts with the environment, receives feedback through sensors, and adjusts its behavior using RL algorithms. By continuously learning and refining its policy, the system continues to improve decision-making and support more complex scenarios that further enhance driving safety and efficiency.

Deep Reinforcement Learning (DRL) is a powerful set of algorithms for teaching agent how to behave in an environment through deep learning and reinforcement Learning principles. This method allows machines to learn through trial and error, like human beings or any other organism on this planet.

The principles underlying DRL share similarities with the brain learning process. As a result, it might provide us valuable clues on artificial and biological intelligence.

Parallels Between DRL and the Brain

Let’s consider some parallels between Deep Reinforcement Learning and the brain:

- Hierarchical Learning: The brain and deep reinforcement learning execute learning across multiple levels of abstraction. The brain processes sensory information in stages, where each stage extracts increasingly more complex features. Redundantly, this is parallel to the deep neural networks in DRL which learn hierarchical representations from basic edges (early layers) up to high-level patterns (deeper ones).

- Credit Assignment: One of the challenges in both DRL and the brain is figuring out which actions are to be credited for rewards. The brain’s reinforcement learning circuits, which involve the prefrontal cortex and basal ganglia, help to attribute credit for actions. DRL deals with it using methods such as backpropagation through time and temporal difference learning.

- Generalization and Transfer Learning: The brain excels at generalizing knowledge from one context to another. For instance, learning to ride a bicycle can make it easier to learn to ride a motorcycle. DRL is beginning to achieve similar feats, with agents that can transfer knowledge across different tasks or environments, although this remains an area of active research.

Advancements and Challenges

DRL has come a long way but is still far from capturing brain-like learning with all its intricacies. Areas where DRL may offer enhancement include the seamless blending of multi-modal (vision, hearing, and touch) information. It also includes robustness to noisy or incomplete data, and its efficiency in learning from limited experiences.

Meanwhile, in DRL research, neuroscience seems to have an outsized influence. To improve DRL performance, new approaches are being examined such as neuromodulation (which mimics how neurotransmitters in the brain regulate learning). Additionally, memory-augmented networks attempt to emulate some unique qualities of human memory.

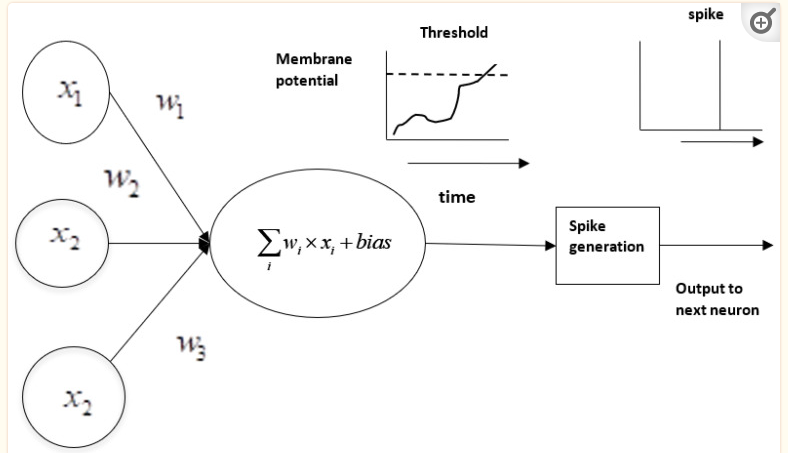

The Spiking Neural Networks are a type of neural model that includes the dynamics of biological neurons. Instead of using continuous values for neuron activations like traditional models, SNNs rely on discrete spikes or pulses. These spikes are generated once the neuron’s membrane potential exceeds some threshold as shown in the image below. These spikes are based on the action potentials of biological neurons.

Energy-efficient spiking neural network in AI(image source)

Key Features of Spiking Neural Networks

Let’s consider some features:

- Temporal Dynamics: SNNs model the timing of spikes. It allows them to track temporal patterns and dynamics that are critical in understanding how the brain performs over a sequence of events.

- Event-based processing: Neurons in SNNs get activated only when spike occurs. This fundamentally event-driven nature is efficient and more akin to how biological brains process information.

- Synaptic Plasticity: SNNs can model several forms of synaptic plasticity. These include spike timing dependence of plasticity (STDP). This mechanism adjusts the strength of connections based on the timing of spikes, mirroring learning processes in the brain.

Connection to Neuroscience

SNNs are meant to reproduce the behavior of biological neurons better than traditional neural networks. They act as a model of neuron behaviors and their electrical actions in receiving and sending spiking under time-dynamic associations.

They offer a window for researchers to understand how information is encoded and processed in biological neurons. This can provide insight into how sensory information is encoded and play a role in learning and memory regulations.

SNNs are central to neuromorphic computing, which aims to develop hardware that mimics the power and efficiency of our brains. This will enable more power-efficient and versatile computing systems.

SNNs can recognize patterns in data streams, like visual or auditory input. They are designed to process and recognize temporal patterns or real-time streams.

They are used for sensory processing and motor control in robotics. They are event-driven, which makes them perfect for real-time decision-making, especially in dynamic environments.

SNNs can also be used in brain-machine interfaces to interpret the neural signals for controlling external devices.

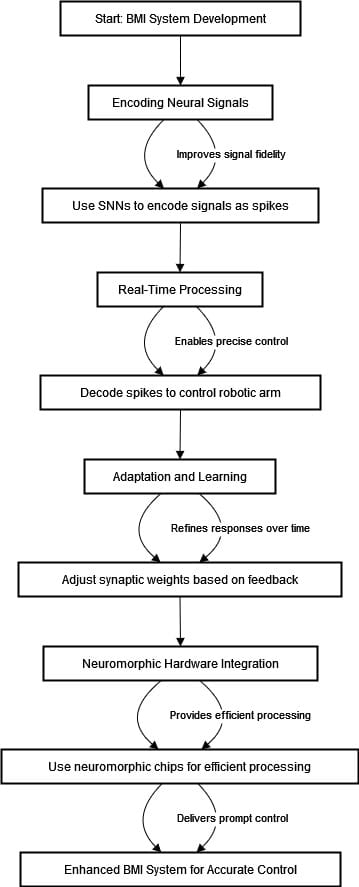

Use Case: Enhancing Brain-Machine Interfaces with Spiking Neural Networks (SNNs)

Brain-machine interfaces (BMIs) refer to systems that recognize and translate neural signals into instructions that can be processed by an external device. They have large possibilities for research including helping people with neurological diseases, improving cognitive functions, and even building state-of-the-art prosthetics.

Spiking Neural Networks (SNNs) provide an alternative to existing approaches that have been found less efficient for ideal BMIs. They are inspired by the neural mechanisms of the brain. Our objective is to enhance the accuracy and responsiveness of a BMI for controlling a robotic arm through thought alone.

Solution

The diagram below can describe the process.

The diagram above represents a flowchart for the development of a Brain-Machine Interface (BMI) system enhanced by Spiking Neural Networks (SNNs). The first phase is the encoding of neural signals with SNNs that convert continuous brain signals to discrete spikes. This encoding improves the signal’s integrity and allows a better approximation to real brain activity. The following stages include real time processing where the timing and order of these spikes are decoded. This enables the robotic arm to be controlled accurately with reactivity driven by neural intention.

After real-time processing, the system can adapt and learn. SNNs adjust synaptic weights according to the robotic arm movements, thus optimizing responses from the network with a learning experience. The last phase focuses on neuromorphic hardware integration, which mimics the brain-like processing of spikes. Such hardware allows energy efficiency and real-time processing. This results in a better BMI system that can command the robotic arm promptly and accurately. Finally, we have an advanced method to design BMIs that uses the biological realism of SNNs for more suitable and adaptable algorithms.

Artifical Intelligence (AI) and Neuroscience are a promising research area, combining both fields. AI refers to the simulation of human intelligence in machines designed for tasks such as medical diagnosis and natural language processing. Hardware advances have seen a transition from machine learning to deep learning, with neuro-inspired architecture leveraging organic neural structures for more efficient computation.

Artificial Neural Networks(ANNs) are artificial versions of biological networks boasting highly impressive artificial intelligence functionalities. RNNs and CNNs are inspired by processes in the brain to recognize patterns in sequential data and visual processing.

Reinforcement Learning (RL) mimics how the brain learns through trial and error, with implications for autonomous driving and other areas. Deep Reinforcement Learning (DRL) investigates further the learning processes of AI. Spiking Neural Networks (SNNs) model more accurately the behavior of biological neurons.