Image courtesy of Nvidia

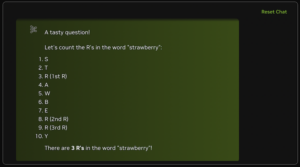

A new AI model from Nvidia knows just how many R’s are in the word strawberry, a feat that OpenAI’s GPT-4o model has yet to achieve. In what is known as the “strawberry problem,” GPT-4o and a few other established models often give the false answer that ‘strawberry’ only has two R’s.
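For reference, the correct count is easy to check programmatically. The snippet below is a minimal sketch in Python; the helper name `count_letter` is purely illustrative and is not taken from Nvidia, OpenAI, or any model's tooling.

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    return word.lower().count(letter.lower())


# The "strawberry problem" asks for exactly this count.
print(count_letter("strawberry", "r"))  # prints 3
```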

Launched on Hugging Face on Oct. 15, the new Nvidia model is called Llama-3.1-Nemotron-70B-Instruct and is based on Meta’s open source Llama foundation models, specifically Llama-3.1-70B-Instruct Base. The Llama series of AI models was designed as an open source foundation for developers to build upon.

The Hugging Face model page asserts that Nemotron-70B surpasses GPT-4o and Anthropic’s Claude 3.5 Sonnet on several benchmarks. Nemotron-70B scores 85.0 on the Arena Hard benchmark, 57.6 on AlpacaEval 2 LC, and 8.98 on the GPT-4-Turbo MT-Bench. The page also notes that Nemotron-70B was fine-tuned using reinforcement learning from human feedback, as well as a new alignment technique from Nvidia called HelpSteer2-preference, which the company says trains the model to follow instructions more closely.

The benchmark results are promising for the AI research concept of alignment, which describes how well a model’s outputs correspond to user requirements and to expectations for reliability and safety. Alignment can be improved through greater customization, enabling enterprises to tailor AI models for specific use cases. The ultimate goal is to provide accurate, helpful responses and eliminate hallucinations.

The Nemotron-70B model solves the “strawberry problem” with ease, demonstrating its advanced reasoning capabilities.

However, it is important to note that benchmarking for large language models is still a developing area of research, and the usefulness of specific models should be tested for individual applications.

Nvidia is currently dominating the AI hardware market, and if its Nemotron models continue to score well in benchmarks, it could mean even more competition in the already booming LLM space. The Nemotron models also show how the company seems intent on becoming a one-stop shop for AI solutions.

One important aspect of Nvidia’s foray into AI models is NIM (Nvidia inference microservices), a downloadable container providing the interface for customers to interact with AI. NIM allows fine-tuning for multiple LLMs using guardrails and optimizations. Nvidia says NIM is easy to install, provides full control of underlying model data, and delivers predictable throughput and latency performance.

OpenAI also released a new model this month called o1, which, interestingly enough, was codenamed Strawberry. The model, the first of a planned series with advanced reasoning capabilities, is offered in preview to paid ChatGPT users in two versions: o1-preview and o1-mini. OpenAI claims the new Strawberry model, trained with a bespoke dataset, has demonstrated a PhD-level capacity in many STEM subjects.

And don’t worry—it can also accurately tell you how many R’s are in the word strawberry.
